class property self.model. The dataset will eventually run out of data (unless it is an Now that we have the neural network prediction, we apply a softmax function to the output of the neural network, in_nn, and then extract the class label and confidence score from the resulting data. We first learned the model deployment process for OAK; specifically, we discussed the process of converting a deep learning model trained in one of several frameworks (e.g., TensorFlow, PyTorch, Caffe) to the intermediate representation and then to the .blob format supported by the OAK device. The confidence of that prediction is simply the probability of the top item. It was originally developed by Google. Aditya Sharma is a Computer Vision and Natural Language Processing research engineer working at Robert Bosch. For large sample sizes (which are quite common in ML), it is generally safe to assume that. You could use Model.fit(..., class_weight={0: 1., 1: 0.5}). To follow this guide, you need to have depthai, opencv, and imutils installed on your system. Before we start our implementation, let's review our project's configuration pipeline. How can I implement confidence level in a CNN with tensorflow? For example, for security, traffic management, manufacturing, healthcare, and agriculture applications, a coin-size edge device like OAK-D can be a great piece of hardware for deploying your deep learning models. This section also describes the confidence of the model overall. 
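The softmax-then-argmax step described above can be sketched as follows. This is a minimal illustration, not the tutorial's own code: the function names, the label list, and the raw scores are all hypothetical, and NumPy is assumed to be available.

```python
import numpy as np

def softmax(logits):
    """Convert raw scores to probabilities; subtracting the max avoids overflow."""
    shifted = np.asarray(logits, dtype=np.float64) - np.max(logits)
    exp = np.exp(shifted)
    return exp / exp.sum()

def top_prediction(logits, labels):
    """Return the most probable label and its probability (the confidence score)."""
    probs = softmax(logits)
    idx = int(np.argmax(probs))
    return labels[idx], float(probs[idx])

# Hypothetical class names and raw network outputs, for illustration only.
LABELS = ["class_a", "class_b", "class_c"]
label, confidence = top_prediction([1.0, 3.0, 0.5], LABELS)
print(f"{label}: {confidence:.2%}")
```

Note that this confidence is only the model's own probability for the top class; it says nothing about whether that probability is well calibrated.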
epochs. scratch, see the guide Helps create the pipeline for inference on OAK with images, Pipeline for inference on OAK with color camera stream, Define a softmax function to convert predictions into probabilities and a function to resize input and swap channel dimensions. drawing the next batches. The deep learning model could be in any format, such as PyTorch, TensorFlow, or Caffe, depending on the framework in which the model was trained.

How to find the confidence level of a classification? The pipeline object returned by the function is assigned to the variable, It would create a pipeline that is ready to process images and perform inference using the, Next, the function extracts the class label by getting the index of the maximum probability and then using it to look up the corresponding label in the. distribution over five classes (of shape (5,)). returns the frame to the calling function. As a deep learning engineer or practitioner, you may be working in a team building a product that requires you to train deep learning models on a specific data modality (e.g., computer vision) on a daily basis. So it says that I think the real response lies in [20-5, 20+5], but to really understand what that means, we need to understand the real phenomenon and the mathematical model. With the frame and neural network data queues defined and the frame postprocessing helper function in place, we start the while loop on Line 45. 4: Bootstrap. For a quick illustration, see the Wikipedia page under Generalization and Statistics. It's good practice to use a validation split when developing your model. You're already using softmax in the set-up; just use it on the final vector to convert it to RMS probabilities. That said, you might want to look into Michael Feindt's NeuroBayes algorithm, which uses a Bayesian approach to forecast predictive densities. 
Processed TensorFlow files are available from the indicated URLs. In our application we do as you have proposed: set the score threshold to something low (even 0.1) and filter on the number of frames in which the object was detected. The converted blob file would then run image classification inference on the OAK-D using the DepthAI API. I got a database of 50 photos, used this video to get me started, and it DID work with Google's Sample Model (I'm using a RPi4B with 8 GB of RAM), then I wanted to create my own model. However, as far as I know, Conformal Prediction (CP) is the only principled method for building calibrated prediction intervals (PI) in nonparametric regression and classification problems. If you do this, the dataset is not reset at the end of each epoch, instead we just keep It's actually quite easy to do with Bayesian deep learning.
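The low-threshold-plus-frame-count idea mentioned above could look roughly like this. It is a sketch under assumptions: the function name, the `(label, score)` tuple layout, and the `min_frames` parameter are hypothetical and not taken from any particular detection API.

```python
from collections import Counter

def stable_detections(frames, score_threshold=0.1, min_frames=3):
    """Keep labels that pass a low score threshold in at least `min_frames` frames.

    `frames` is a list with one entry per video frame; each entry is a list of
    (label, score) tuples produced by some detector (hypothetical layout).
    """
    counts = Counter()
    for detections in frames:
        # Count each label at most once per frame.
        seen = {label for label, score in detections if score >= score_threshold}
        counts.update(seen)
    return {label for label, n in counts.items() if n >= min_frames}

# Made-up detections over four frames, for illustration only.
frames = [
    [("cat", 0.15), ("dog", 0.05)],
    [("cat", 0.40)],
    [("cat", 0.12), ("bird", 0.90)],
    [("dog", 0.30)],
]
print(stable_detections(frames))
```

The design choice here is to trade a permissive per-frame threshold for temporal consistency: a label that appears only once, even with a high score, is discarded.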


The IR consists of the model configuration in. The Keras Sequential model consists of three convolution blocks (tf.keras.layers.Conv2D) with a max pooling layer (tf.keras.layers.MaxPooling2D) in each of them. So, to derive something, use the various applied and fundamental sciences: control (with assumptions about the dynamics), convex optimization (with some extra conditions on the function), mathematical statistics (with preliminary assumptions on distributions), or signal processing (with the assumption that the signal is band-limited). It's so much cheaper.

When the weights used are ones and zeros, the array can be used as a mask for In fact, this is even built in as the ReduceLROnPlateau callback. I find that a simple method is MC dropout.
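For readers unfamiliar with MC dropout, the idea is to leave dropout switched on at prediction time, run several stochastic forward passes, and use the spread of the outputs as an uncertainty estimate. The sketch below is a toy NumPy version with a made-up two-layer network and random weights; every name in it is hypothetical. In Keras, the equivalent trick is to call the model with `training=True` so that its Dropout layers stay active.

```python
import numpy as np

def mc_dropout_predict(x, w1, w2, rate=0.5, n_samples=100, seed=0):
    """Average class probabilities over stochastic forward passes with dropout on."""
    rng = np.random.default_rng(seed)
    samples = []
    for _ in range(n_samples):
        hidden = np.maximum(x @ w1, 0.0)           # ReLU hidden layer
        mask = rng.random(hidden.shape) >= rate    # dropout stays ON at inference
        hidden = hidden * mask / (1.0 - rate)      # inverted-dropout scaling
        logits = hidden @ w2
        logits = logits - logits.max()             # numerical stability
        probs = np.exp(logits) / np.exp(logits).sum()
        samples.append(probs)
    samples = np.stack(samples)
    # Mean is the prediction; standard deviation is a rough uncertainty measure.
    return samples.mean(axis=0), samples.std(axis=0)

# Toy input and random weights, for illustration only (not a trained model).
rng = np.random.default_rng(42)
x = rng.normal(size=8)
w1 = rng.normal(size=(8, 16))
w2 = rng.normal(size=(16, 3))
mean_probs, std_probs = mc_dropout_predict(x, w1, w2)
```

A large standard deviation for the top class suggests the prediction is sensitive to which units were dropped, which is one informal signal of low confidence.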


Output the confidence / probability for a class of a CNN neural network. But notice that these probabilities are produced by the model, and they might be overconfident unless you use a model that produces calibrated probabilities (like a Bayesian Neural Network). Let's consider the following model (here, we build in with the Functional API, but it See for example. This will take you from a directory of images on disk to a tf.data.Dataset in just a couple lines of code. Create a new neural network with tf.keras.layers.Dropout before training it using the augmented images: After applying data augmentation and tf.keras.layers.Dropout, there is less overfitting than before, and training and validation accuracy are closer aligned: Use your model to classify an image that wasn't included in the training or validation sets. Moreover, sometimes these networks do not even fit (run) on a CPU. In this case, the image classifier model will classify objects in the images. On Line 21, we start to iterate over the list of image paths stored in the config.TEST_DATA. Yarin Gal disagrees with the accepted answer. the Dataset API. If you want to modify your dataset between epochs, you may implement on_epoch_end. This would require that the asymptotic distribution is normal. Also, the difference between training and validation accuracy is noticeable, a sign of overfitting. 
from the command line: The easiest way to use TensorBoard with a Keras model and the fit() method is the guide to saving and serializing Models. Appropriate Method for Generating Confidence Intervals for Neural Network, Predicting the confidence of a neural network. In today's tutorial, we will take one step further and deploy the image classification model on OAK-D. First, we will learn the process of converting and optimizing the TensorFlow image classification model and then test the converted model on OAK-D with both images and the OAK device camera stream. two important properties: The method __getitem__ should return a complete batch. This is the method: What should I add in the method to get the confidence level of the respective prediction? The main reason why only a specific model format is required and the prominent deep learning frameworks don't work directly on an OAK device is that the hardware has a visual processing unit based on Intel's MyriadX processor, which requires the model in blob file format. I'd be curious why this suggestion was downvoted, as it is essentially bootstrapping in a slightly unconventional way (the rounding component of the problem makes it easy to check how confident the neural net is about the prediction). in the dataset. batch_size, and repeatedly iterating over the entire dataset for a given number of the model. Use 80% of the images for training and 20% for validation. It assigns the pipeline object created earlier to the Device class. Here's another option: the argument validation_split allows you to automatically These can be included inside your model like other layers, and run on the GPU. $$ e \pm 1.96\sqrt{\frac{e\,(1-e)}{n}}$$. shape (764,)) and a single output (a prediction tensor of shape (10,)). 
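The interval $e \pm 1.96\sqrt{e(1-e)/n}$ quoted above is the normal-approximation (Wald) interval for an error rate $e$ measured on $n$ test samples. A small sketch of the arithmetic follows; the function name and the example numbers are hypothetical, and clipping the result to [0, 1] is an addition made here for convenience, not part of the formula.

```python
import math

def error_rate_interval(error_rate, n, z=1.96):
    """Normal-approximation interval for a classification error rate.

    Assumes n is large enough for the normal approximation to hold.
    """
    half_width = z * math.sqrt(error_rate * (1.0 - error_rate) / n)
    # Clipping to [0, 1] is a convenience added in this sketch.
    return max(0.0, error_rate - half_width), min(1.0, error_rate + half_width)

# Example: 20% error measured on 1000 held-out samples (made-up numbers).
low, high = error_rate_interval(0.2, 1000)
print(f"95% interval: [{low:.4f}, {high:.4f}]")
```

This interval describes uncertainty about the model's overall error rate, not the confidence of any single prediction.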
higher than 0 and lower than 1. Now, let's start with today's tutorial and learn about the deployment on OAK! creates an incentive for the model not to be too confident, which may help data in a way that's fast and scalable.

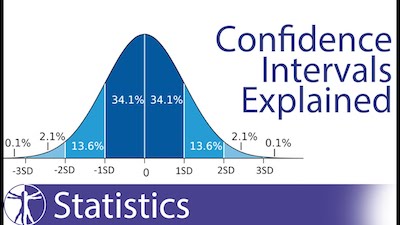

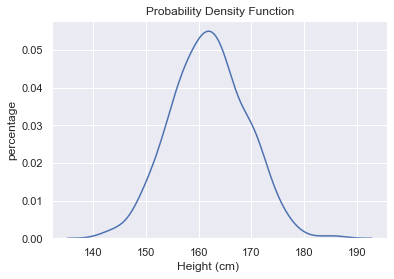


specifying a loss function in compile: you can pass lists of NumPy arrays (with In this tutorial, you'll use data augmentation and add dropout to your model. image_classification.py import tensorflow as tf $$F_1 = 2 \cdot \frac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$$ Abstract Predicting the function of a protein from its amino acid sequence is a long-standing challenge in bioinformatics. Then, a depthai pipeline is initialized on the host, which helps define the nodes, the flow of data, and communication between the nodes (Line 11). If no object exists in that box, the confidence is 0. Even assume it's additive "predict_for_mean" + "predict_for_error". In particular, the keras.utils.Sequence class offers a simple interface to build A common pattern when training deep learning models is to gradually reduce the learning To use the trained model with on-device applications, first convert it to a smaller and more efficient model format called a TensorFlow Lite model. If you are looking for an interval that will contain a future. For a tutorial on CP, see Shafer & Vovk (2008), J. Luckily, all these libraries are pip-installable: This dictionary maps class indices to the weight that should You might want to search a bit, perhaps also using other keywords like "forecast distributions" or "predictive densities" and such.


The tf.data API is a set of utilities in TensorFlow 2.0 for loading and preprocessing Image Classification using TensorFlow Pretrained Models All the code that we will write will go into the image_classification.py Python script. I tried a couple of options, but ultimately failed since the type of file I needed was a .TFLITE Another technique to reduce overfitting is to introduce dropout regularization to the network. Here's a simple example saving a list of per-batch loss values during training: When you're training a model on relatively large datasets, it's crucial to save Here's a basic example: You can also write your own callback for saving and restoring models. Understanding dropout method: one mask per batch, or more? Scientists use some preliminary assumptions (called axioms) to derive something. about models that have multiple inputs or outputs? PolynomialDecay, and InverseTimeDecay. The magic happens on Line 11, where we initialize the depthai images pipeline by calling the create_pipeline_images() function from the utils module. If that's the case, the loop is broken. For now, let's quickly summarize what we learned today. 
This guide covers training, evaluation, and prediction (inference) models result(), respectively) because in some cases, the results computation might be very this layer is just for the sake of providing a concrete example): You can do the same for logging metric values, using add_metric(): In the Functional API, For a complete guide about creating Datasets, see the Prediction intervals (PI) in non parametric regression & classification problems, such as neural nets, SVMs, random forests, etc. Finally, as a sanity check, we tested the model in Google Colab with some sample vegetable test images before feeding the OAK with the optimized model. You can apply it to the dataset by calling Dataset.map: Or, you can include the layer inside your model definition, which can simplify deployment. could be a Sequential model or a subclassed model as well): Here's what the typical end-to-end workflow looks like, consisting of: We specify the training configuration (optimizer, loss, metrics): We call fit(), which will train the model by slicing the data into "batches" of size You can create a custom callback by extending the base class Next, we convert the intermediate representation to MyriadX blob file format using the Model Compiler. metrics via a dict: We recommend the use of explicit names and dicts if you have more than 2 outputs. Then, we computed the end-to-end runtime performance for the video inference pipeline, and the OAK device achieved real-time speed (i.e., 30 FPS). TensorFlow is the machine learning library of choice for professional applications, while Keras offers a simple and powerful Python API for accessing TensorFlow. An optional step is to validate the intermediate representation by running inference on sample test images. If you need a metric that isn't part of the API, you can easily create custom metrics Your best bet is likely to work directly with NN architectures that do not output single point predictions, but entire predictive distributions. 
gets randomly interrupted. can pass the steps_per_epoch argument, which specifies how many training steps the Let's now take a look at the case where your data comes in the form of a guide to multi-GPU & distributed training. A "sample weights" array is an array of numbers that specify how much weight I don't want to use the confidence of variable 'q'; I want to use the Bayesian approach. In deep learning, we need to train neural networks. TensorFlow is an open-source machine learning software library for numerical computation using data flow graphs.


Other areas make some preliminary assumptions (called axioms) to derive something. There was no need to downvote, just ask for clarification, but oh well. For a quick illustration, see the Wikipedia page under Generalization and Statistics.

There is a softmax in the set-up; just use it on the final vector to convert it to probabilities. The confidence of that prediction is simply the probability of the top item. To get the confidence level of a CNN classification beyond that, a simple method is MC dropout; another option is a Bayesian approach to forecast predictive densities. For the confidence of the model overall, with error e measured on n samples, an approximate 95% interval is

$$e \pm 1.96\sqrt{\frac{e\,(1-e)}{n}}$$

For large sample sizes (which is quite common in ML) it is generally safe to assume that the asymptotic distribution is normal.

The first method involves creating a function that accepts inputs y_true and … To weight classes differently, you could use Model.fit(..., class_weight={0: 1., 1: 0.5}). Metrics can be passed via a dict: we recommend the use of explicit names and dicts. __getitem__ should return a complete batch, and if you want to modify your dataset between epochs, you may implement on_epoch_end. Reducing the learning rate when a metric stops improving is even built in as the ReduceLROnPlateau callback. Consider the following model (here, we build it with the Functional API, but it …), whose class output is a probability distribution over five classes (of shape (5,)).

You can go from a directory of images on disk to a tf.data.Dataset in just a couple lines of code, loading data in a way that's fast and scalable. It is good practice to use a validation split when developing your model: 80% of the images for training and 20% for validation. The Keras Sequential model consists of three convolution blocks (tf.keras.layers.Conv2D). The difference between training and validation accuracy is noticeable, a sign of overfitting; one remedy is to introduce dropout regularization.

To follow this guide, you need to have depthai, opencv, and imutils installed on your system. All the code that we will write will go into the image_classification.py Python script. An optional step is to validate the intermediate representation by running inference on sample test images. The pipeline object created earlier is passed to the Device class, and inference runs on the OAK-D using the depthai API.
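The normal-approximation interval for a classifier's error rate, e ± 1.96·sqrt(e(1 − e)/n), is easy to compute directly. The sketch below assumes the error rate was measured on n independent test samples and that n is large enough for the normal approximation to hold. The function name and the example numbers are illustrative only.

```python
import math


def error_confidence_interval(error_rate, n_samples, z=1.96):
    """Approximate confidence interval for an error rate (z=1.96 gives about 95%)."""
    half_width = z * math.sqrt(error_rate * (1.0 - error_rate) / n_samples)
    # Clamp to [0, 1] because an error rate cannot leave that range.
    return max(0.0, error_rate - half_width), min(1.0, error_rate + half_width)


# Hypothetical example: 40% error measured on 1000 held-out samples.
low, high = error_confidence_interval(0.40, 1000)
```

Because the half-width shrinks with the square root of n, quadrupling the test set only halves the width of the interval.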

